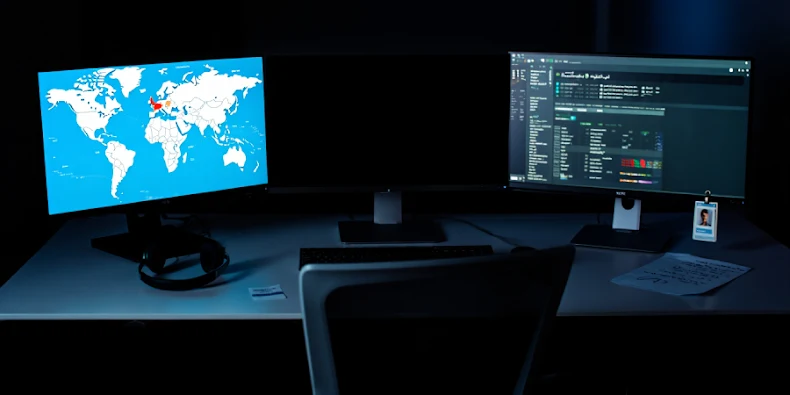

Threat hunters have uncovered new activity associated with an Iranian threat actor known as Infy (aka Prince of Persia), nearly five years after the hacking group was observed targeting victims in Sweden, the Netherlands, and Turkey.

“The scale of Prince of Persia’s activity is more significant than we originally anticipated,” Tomer Bar, vice president of security research at SafeBreach, said in a technical breakdown shared with The Hacker News. “This threat group is still active, relevant, and dangerous.”

Infy is one of the oldest advanced persistent threat (APT) actors in existence, with evidence of early activity dating all the way back to December 2004, according to a report released by Palo Alto Networks Unit 42 in May 2016 that was also authored by Bar, along with researcher Simon Conant.

The group has also managed to remain elusive, attracting little attention compared with other Iranian groups such as Charming Kitten, MuddyWater, and OilRig. Attacks mounted by the group have prominently leveraged two strains of malware: Foudre, a downloader and victim profiler, and Tonnerre, a second-stage implant that Foudre delivers to extract data from high-value machines. It’s assessed that Foudre is distributed via phishing emails.

The latest findings from SafeBreach have uncovered a covert campaign that has targeted victims across Iran, Iraq, Turkey, India, and Canada, as well as Europe, using updated versions of Foudre (version 34) and Tonnerre (versions 12-18, 50). The latest version of Tonnerre was detected in September 2025.

The attack chains have also shifted from using a macro-laced Microsoft Excel file to embedding an executable within such documents to install Foudre. Perhaps the most notable aspect of the threat actor’s modus operandi is the use of a domain generation algorithm (DGA) to make its command-and-control (C2) infrastructure more resilient.

In addition, Foudre and Tonnerre artifacts are known to validate whether the C2 domain is authentic by downloading an RSA signature file, which the malware then decrypts using a public key and compares with a locally stored validation file.
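The check described above follows the general shape of RSA signature verification: a value transformed with the private key can be recovered with the public key and compared against what the client expects. The following is a minimal, illustrative sketch of that idea only. The tiny textbook key, the integer `validation_value`, and the function names are all assumptions made for the example; they are not taken from the SafeBreach analysis, and real implementations use keys thousands of bits long together with padding and hashing.

```python
# Toy RSA parameters for illustration only (hypothetical, far too small for real use).
N = 3233   # modulus = 61 * 53
E = 17     # public exponent, known to the verifying side
D = 2753   # private exponent, known only to the signing side


def sign(value: int) -> int:
    """Transform a value with the private exponent (the signer's step)."""
    return pow(value, D, N)


def verify(signature: int, expected: int) -> bool:
    """Recover the value with the public exponent and compare it to the local copy."""
    return pow(signature, E, N) == expected


# Hypothetical stand-in for the contents of a locally stored validation file.
validation_value = 65

signature = sign(validation_value)
print(verify(signature, validation_value))            # genuine signature
print(verify((signature + 1) % N, validation_value))  # tampered signature
```

Because only the holder of the private exponent can produce a signature that recovers to the expected value, a party who merely registers a matching domain would fail this kind of check.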

SafeBreach’s analysis of the C2 infrastructure has also uncovered a directory named “key” that’s used for C2 validation, along with other folders to store communication logs and the exfiltrated files.

“Every day, Foudre downloads a dedicated signature file encrypted with an RSA private key by the threat actor and then uses RSA verification with an embedded public key to verify that this domain is an approved domain,” Bar said. “The request’s format is:

‘https://<domain name>/key/<domain name><yy><day of year>.sig.’”
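For defenders, the request format quoted above lends itself to a simple pattern match against proxy or DNS-resolved URL logs. The sketch below is a hypothetical helper, not tooling from SafeBreach. It assumes that `<yy>` is a two-digit year and that `<day of year>` is a one- to three-digit number, since the quoted format does not specify padding; `expected_sig_url` additionally assumes zero-padding to three digits.

```python
import re
from datetime import date

# Assumed reading of the quoted format:
#   https://<domain>/key/<domain><yy><day of year>.sig
# The named backreference requires the file name to repeat the host name.
SIG_URL_RE = re.compile(
    r"^https://(?P<domain>[A-Za-z0-9.-]+)/key/"
    r"(?P=domain)(?P<yy>\d{2})(?P<doy>\d{1,3})\.sig$"
)


def looks_like_sig_request(url: str) -> bool:
    """Return True if the URL matches the assumed signature-file pattern."""
    return SIG_URL_RE.match(url) is not None


def expected_sig_url(domain: str, day: date) -> str:
    """Build the URL a client would request on a given day (padding is an assumption)."""
    yy = day.strftime("%y")   # two-digit year
    doy = day.strftime("%j")  # day of year, zero-padded to three digits
    return f"https://{domain}/key/{domain}{yy}{doy}.sig"


# example.com is a placeholder, not an indicator from the report.
url = expected_sig_url("example.com", date(2025, 9, 1))
print(url)
print(looks_like_sig_request(url))
```

A match on this pattern alone is weak evidence, so in practice it would be combined with other indicators before raising an alert.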

Also present on the C2 server is a “download” directory whose current purpose is unknown. It is suspected of being used to download and upgrade to a new version.

The latest version of Tonnerre, on the other hand, includes a mechanism to contact a Telegram group (named “سرافراز,” meaning “proudly” in Persian) through the C2 server. The group has two members: a Telegram bot “@ttestro1bot” that’s likely used to issue commands and collect data, and a user with the handle “@ehsan8999100.”

While the use of the messaging app for C2 is not uncommon, what’s notable is that the information about the Telegram group is stored in a file named “tga.adr” within a directory called “t” on the C2 server. It’s worth noting that the download of the “tga.adr” file can only be triggered for a specific list of victim GUIDs.

Also discovered by the cybersecurity company are other older variants used in Foudre campaigns between 2017 and 2020:

- A version of Foudre camouflaged as Amaq News Finder to download and execute the malware

- A new version of a trojan called MaxPinner that’s downloaded by Foudre version 24 DLL to spy on Telegram content

- A variant of malware called Deep Freeze, similar to Amaq News Finder, that is used to infect victims with Foudre

- An unknown malware called Rugissement

“Despite the appearance of having gone dark in 2022, Prince of Persia threat actors have done quite the opposite,” SafeBreach said. “Our ongoing research campaign into this prolific and elusive group has highlighted critical details about their activities, C2 servers, and identified malware variants in the last three years.”

The disclosure comes as DomainTools’ continued analysis of Charming Kitten leaks has painted a picture of a hacking group that functions more like a government department, while running “espionage operations with clerical precision.” The threat actor has also been unmasked as being behind the Moses Staff persona.

“APT 35, the same administrative machine that runs Tehran’s long-term credential-phishing operations, also ran the logistics that powered Moses Staff’s ransomware theatre,” the company said.

“The supposed hacktivists and the government cyber-unit share not only tooling and targets but also the same accounts-payable system. The propaganda arm and the espionage arm are two products of a single workflow: different ‘projects’ under the same internal ticketing regime.”

Source: thehackernews.com…