As IT environments become increasingly distributed and organizations adopt hybrid and remote work at scale, traditional perimeter-based security models and on-premises Privileged Access Management (PAM) solutions no longer suffice. IT administrators, contractors and third-party vendors now require secure access to critical systems from any location and on any device, without compromising compliance or increasing security risks. To keep up with modern demands, many organizations are turning to Remote Privileged Access Management (RPAM) for a cloud-based approach to securing privileged access that extends protection beyond on-prem environments to wherever privileged users connect.

Continue reading to learn more about RPAM, how it differs from traditional PAM and why RPAM adoption is growing across industries.

What is RPAM?

Remote Privileged Access Management (RPAM) allows organizations to securely monitor and manage privileged access for remote and third-party users. Unlike traditional PAM solutions, RPAM extends granular access controls beyond the corporate perimeter, enabling administrators, contractors and vendors to connect securely from any location.

RPAM enforces least-privilege access, verifies user identities and monitors every privileged session, all without exposing credentials or depending on Virtual Private Networks (VPNs). Each privileged session is recorded in detail, giving security teams full visibility into who accessed what and when.

How does PAM differ from RPAM?

Both PAM and RPAM help organizations secure privileged access, but they were built for different operational environments. Traditional PAM solutions are designed to monitor and manage privileged accounts within an organization’s internal network. Because they assume users connect from inside that perimeter, legacy PAM solutions struggle to keep up with today’s distributed, cloud-based infrastructure.

RPAM, on the other hand, extends PAM capabilities to hybrid and remote environments, providing secure privileged access regardless of a user’s location. It delivers that access without requiring VPNs or agent-based deployments, which improves scalability and reduces the attack surface. By supporting zero-trust principles and cloud-native architectures, RPAM gives organizations the control and flexibility needed to protect privileged accounts across modern environments.

Why RPAM adoption is accelerating

As infrastructure moves to the cloud and teams become more distributed, the need for secure, flexible remote access is outpacing what legacy tools were built to handle. Here are the main reasons RPAM adoption is accelerating.

Remote work demands strong access controls

With the steady rise of hybrid and remote work, organizations face access challenges that extend beyond their corporate networks. Employees, contractors and vendors need privileged access to critical systems from many locations and devices, so organizations rely on RPAM to provide policy-based, Just-in-Time (JIT) access: elevated permissions are granted only when a task requires them and expire automatically afterward, which eliminates standing privileges across distributed environments. RPAM ensures that every connection, whether from an internal IT admin or an external vendor, is authorized and monitored to maintain security and accountability.
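To make the JIT model concrete, here is a minimal Python sketch of a hypothetical access broker. It assumes a simple per-user allowlist as the "policy" and an in-memory store of time-boxed grants; the names `JitBroker`, `request_access` and `is_allowed` are illustrative only and do not reflect KeeperPAM's or any other product's API. What it shows is that a privilege exists only inside its grant window and disappears when the window closes, so nothing is left standing.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class Grant:
    user: str
    resource: str
    expires_at: datetime


@dataclass
class JitBroker:
    # Hypothetical policy: the resources each user may request elevation on.
    policy: dict
    # Upper bound on any single grant, regardless of what is requested.
    max_ttl: timedelta = timedelta(hours=1)
    grants: list = field(default_factory=list)
    audit: list = field(default_factory=list)

    def request_access(self, user: str, resource: str, ttl: timedelta,
                       now: datetime) -> Optional[Grant]:
        """Issue a time-boxed grant if policy allows it; log every decision."""
        if resource not in self.policy.get(user, set()):
            self.audit.append(f"{now.isoformat()} DENY {user} -> {resource}")
            return None
        grant = Grant(user, resource, now + min(ttl, self.max_ttl))
        self.grants.append(grant)
        self.audit.append(f"{now.isoformat()} GRANT {user} -> {resource} "
                          f"until {grant.expires_at.isoformat()}")
        return grant

    def is_allowed(self, user: str, resource: str, now: datetime) -> bool:
        """Check access at `now`, first discarding expired grants."""
        # Dropping expired grants means no privilege outlives its window.
        self.grants = [g for g in self.grants if g.expires_at > now]
        return any(g.user == user and g.resource == resource
                   for g in self.grants)


# Usage: a vendor gets 30 minutes on one database and nothing else.
t0 = datetime(2025, 1, 1, 9, 0, tzinfo=timezone.utc)
broker = JitBroker(policy={"vendor-alice": {"db-prod-01"}})
broker.request_access("vendor-alice", "db-prod-01", timedelta(minutes=30), t0)
print(broker.is_allowed("vendor-alice", "db-prod-01", t0 + timedelta(minutes=10)))  # True
print(broker.is_allowed("vendor-alice", "db-prod-01", t0 + timedelta(minutes=45)))  # False
print(broker.request_access("vendor-alice", "hr-fileserver", timedelta(minutes=5), t0))  # None
```

Capping the requested `ttl` at `max_ttl` keeps an overly generous or mistaken request from quietly recreating a standing privilege.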

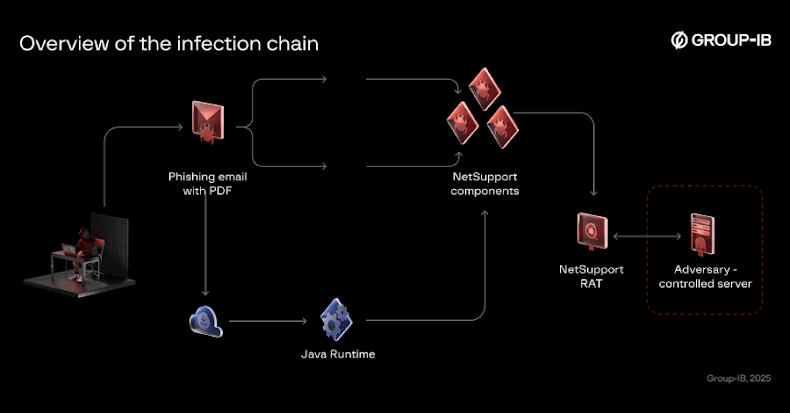

Cybercriminals target weak remote access points

Traditional remote access methods, including VPNs and Remote Desktop Protocol (RDP) sessions, are common attack vectors. Once cybercriminals obtain stolen credentials or gain access to a remote system, they can deploy ransomware, steal data or move laterally within an organization’s network. RPAM mitigates these risks by enforcing Multi-Factor Authentication (MFA), recording privileged sessions and supporting a zero-trust security model. It also eliminates shared credentials, ensuring that only continuously verified users can access sensitive data.

Compliance requirements drive automation

Organizations must comply with a variety of regulatory frameworks, such as ISO 27001 and HIPAA, that require them to track and review privileged activity. RPAM supports compliance by automating session logging and recording detailed audit trails. Beyond streamlining audits, these records give organizations insight into privileged activity and help them demonstrate that they meet compliance requirements.
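As an illustration of what an automated, detailed audit trail can look like, the following Python sketch records each privileged-session event as a structured record and answers an auditor's basic question: who accessed a given resource during a given window. `AuditTrail`, `SessionEvent` and the action names are hypothetical; commercial RPAM products store richer data, such as the session recordings described earlier, in their own formats.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class SessionEvent:
    timestamp: str  # ISO 8601 with UTC offset
    user: str
    resource: str
    action: str     # hypothetical values: "session_start", "command", "session_end"
    detail: str = ""


class AuditTrail:
    """Append-only, in-memory log of privileged-session events."""

    def __init__(self) -> None:
        self._events: List[SessionEvent] = []

    def record(self, user: str, resource: str, action: str,
               detail: str = "", when: Optional[datetime] = None) -> SessionEvent:
        # Stamp each event automatically so logging needs no manual step.
        if when is None:
            when = datetime.now(timezone.utc)
        event = SessionEvent(when.isoformat(), user, resource, action, detail)
        self._events.append(event)
        return event

    def who_accessed(self, resource: str, start: datetime, end: datetime) -> List[str]:
        """Users who opened a session on `resource` in [start, end)."""
        return sorted({
            e.user for e in self._events
            if e.resource == resource
            and e.action == "session_start"
            and start <= datetime.fromisoformat(e.timestamp) < end
        })

    def export_jsonl(self) -> str:
        """One JSON object per line, a common format for log pipelines."""
        return "\n".join(json.dumps(asdict(e)) for e in self._events)


# Usage: who opened a session on db-prod-01 between 13:00 and 15:00 UTC?
t = datetime(2025, 3, 1, 14, 0, tzinfo=timezone.utc)
trail = AuditTrail()
trail.record("admin-bob", "db-prod-01", "session_start", when=t)
trail.record("admin-bob", "db-prod-01", "command", "SHOW TABLES", when=t)
trail.record("vendor-alice", "db-prod-01", "session_start", when=t.replace(hour=16))
print(trail.who_accessed("db-prod-01", t.replace(hour=13), t.replace(hour=15)))  # ['admin-bob']
```

Keeping events structured rather than as free text is what makes queries like `who_accessed` cheap to answer; a production trail would also need durable, access-controlled storage.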

The future of privileged access management

As remote work and cloud adoption continue to reshape enterprises, traditional PAM solutions must evolve to meet the demands of remote access. The future of PAM lies in RPAM solutions that deliver secure, cloud-native control over privileged access across distributed networks. RPAM capabilities such as agentic AI threat detection can help organizations identify suspicious activity and stop potential data breaches before they happen. Modern organizations must shift toward solutions built on zero-trust architectures, in which each access request is authenticated and continuously validated. KeeperPAM® offers a scalable, cloud-native RPAM solution that enables enterprises to secure privileged access and maintain compliance, regardless of where their users are located.

Source: thehackernews.com…