Cybersecurity researchers have charted the evolution of XWorm malware into a versatile tool for supporting a wide range of malicious actions on compromised hosts.

“XWorm’s modular design is built around a core client and an array of specialized components known as plugins,” Trellix researchers Niranjan Hegde and Sijo Jacob said in an analysis published last week. “These plugins are essentially additional payloads designed to carry out specific harmful actions once the core malware is active.”

XWorm, first observed in 2022 and linked to a threat actor named EvilCoder, is a Swiss Army knife of malware that can facilitate data theft, keylogging, screen capture, persistence, and even ransomware operations. It’s primarily propagated via phishing emails and bogus sites advertising malicious ScreenConnect installers.

Some of the other tools advertised by the developer include a .NET-based malware builder, a remote access trojan called XBinder, and a program that can bypass User Account Control (UAC) restrictions on Windows systems. In recent years, the development of XWorm has been led by an online persona called XCoder.

In a report published last month, Trellix detailed shifting XWorm infection chains that have used Windows shortcut (LNK) files distributed via phishing emails to execute PowerShell commands. These commands drop a harmless TXT file and a deceptive executable masquerading as Discord, which ultimately launches the malware.

XWorm incorporates various anti-analysis and evasion mechanisms that check for tell-tale signs of a virtualized environment and, if any are found, immediately cease execution. The malware’s modularity means various commands can be issued from an external server to perform actions like shutting down or restarting the system, downloading files, opening URLs, and initiating DDoS attacks.

“This rapid evolution of XWorm within the threat landscape, and its current prevalence, highlights the critical importance of robust security measures to combat ever-changing threats,” the company noted.

XWorm’s operations have also witnessed their share of setbacks over the past year, the most important being XCoder’s abrupt decision to delete their Telegram account in the second half of 2024, leaving the future of the tool in limbo. Since then, however, threat actors have been observed distributing a cracked version of XWorm 5.6 that was itself laced with malware, infecting other threat actors who end up downloading it.

This included a campaign by an unknown threat actor that tricked script kiddies into downloading a trojanized version of the XWorm RAT builder via GitHub repositories, file-sharing services, Telegram channels, and YouTube videos, compromising more than 18,000 devices globally.

This has been complemented by attackers distributing modified versions of XWorm – one of which is a Chinese variant codenamed XSPY – as well as the discovery of a remote code execution (RCE) vulnerability in the malware that allows attackers with the command-and-control (C2) encryption key to execute arbitrary code.

While the apparent abandonment of XWorm by XCoder raised the possibility that the project was “closed for good,” Trellix said it spotted a threat actor named XCoderTools offering XWorm 6.0 on cybercrime forums on June 4, 2025, for $500 for lifetime access, describing it as a “fully re-coded” version with a fix for the aforementioned RCE flaw. It’s currently not known if the latest version is the work of the same developer or someone else capitalizing on the malware’s reputation.

Campaigns distributing XWorm 6.0 in the wild have used malicious JavaScript files in phishing emails. When opened, these files display a decoy PDF document while, in the background, PowerShell code is executed to inject the malware into a legitimate Windows process like RegSvcs.exe without attracting attention.

XWorm 6.0 is designed to connect to its C2 server at 94.159.113[.]64 on port 4411 and supports a command called “plugin” to run more than 35 DLL payloads in the infected host’s memory and carry out various tasks.
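For defenders, the address and port above can be matched against outbound connection records. The following is a minimal sketch of that check, not a production detection: the record layout (`src_ip`, `dst_ip`, `dst_port`) and the function names are hypothetical, and a real deployment would read from whatever firewall or EDR log format is actually in use.

```python
import ipaddress

# Indicator as published in the article (defanged form).
C2_IOC = "94.159.113[.]64"
C2_PORT = 4411


def refang(indicator: str) -> str:
    """Turn a defanged indicator such as 1.2.3[.]4 back into a plain address."""
    return indicator.replace("[.]", ".")


def find_c2_connections(records, ioc=C2_IOC, port=C2_PORT):
    """Return the records whose destination matches the IOC address and port.

    `records` is an iterable of dicts with hypothetical keys
    'dst_ip' and 'dst_port'; malformed records are skipped.
    """
    target = ipaddress.ip_address(refang(ioc))
    hits = []
    for rec in records:
        try:
            dst = ipaddress.ip_address(rec["dst_ip"])
        except (KeyError, ValueError):
            continue  # missing or unparsable destination address
        if dst == target and rec.get("dst_port") == port:
            hits.append(rec)
    return hits


# Made-up sample records for illustration only.
sample = [
    {"src_ip": "10.0.0.5", "dst_ip": refang(C2_IOC), "dst_port": C2_PORT},
    {"src_ip": "10.0.0.7", "dst_ip": "192.0.2.10", "dst_port": 443},
    {"src_ip": "10.0.0.9", "dst_ip": "not-an-ip", "dst_port": 4411},
]

matches = find_c2_connections(sample)
```

Matching on both address and port keeps the check narrow. Because C2 infrastructure changes often, a single hard-coded indicator like this one is best treated as a starting point for hunting rather than a durable rule.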

“When the C2 server sends the command ‘plugin,’ it includes the SHA-256 hash of the plugin DLL file and the arguments for its invocation,” Trellix explained. “The client then uses the hash to check if the plugin has been previously received. If the key is not found, the client sends a ‘sendplugin’ command to the C2 server, along with the hash.”

“The C2 server then responds with the command ‘savePlugin’ along with a base64 encoded string containing the plugin and SHA-256 hash. Upon receiving and decoding the plugin, the client loads the plugin into the memory.”
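For analysts writing traffic parsers or emulators, the exchange Trellix describes amounts to a cache lookup keyed by SHA-256 hash. The sketch below is a toy model of that message flow under stated assumptions: only the command names (“plugin,” “sendplugin,” “savePlugin”) come from the report, while the class, method names, tuple layout, and the hash verification step are hypothetical. It only stores placeholder bytes and does not load or execute anything.

```python
import base64
import hashlib


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


class PluginCacheModel:
    """Toy model of the hash-keyed plugin lookup; stores bytes only."""

    def __init__(self):
        self.cache = {}  # maps SHA-256 hex digest -> raw payload bytes

    def on_plugin(self, plugin_hash: str):
        """Model the 'plugin' command.

        Returns None if the hash is already cached, otherwise the
        ('sendplugin', hash) request the client would send back.
        """
        if plugin_hash in self.cache:
            return None
        return ("sendplugin", plugin_hash)

    def on_save_plugin(self, b64_blob: str, plugin_hash: str) -> None:
        """Model the 'savePlugin' response: decode and cache the payload."""
        data = base64.b64decode(b64_blob)
        # Assumption: the report does not say whether the hash is verified;
        # the model checks it so that cache keys stay consistent.
        if sha256_hex(data) != plugin_hash:
            raise ValueError("payload does not match the advertised hash")
        self.cache[plugin_hash] = data


# Walk through the exchange with harmless placeholder bytes.
payload = b"placeholder bytes, not a real plugin"
digest = sha256_hex(payload)

model = PluginCacheModel()
first = model.on_plugin(digest)    # cache miss, so the plugin is requested
model.on_save_plugin(base64.b64encode(payload).decode("ascii"), digest)
second = model.on_plugin(digest)   # cache hit, so nothing is requested
```

The practical consequence for network defenders is that a plugin’s bytes cross the wire only the first time a given client needs it, so later “plugin” commands for the same hash may carry no payload to inspect.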

Some of the supported plugins in XWorm 6.x (6.0, 6.4, and 6.5) are listed below –

- RemoteDesktop.dll, to create a remote session to interact with the victim’s machine

- WindowsUpdate.dll, Stealer.dll, Recovery.dll, merged.dll, Chromium.dll, and SystemCheck.Merged.dll, to steal the victim’s data, such as Windows product keys, Wi-Fi passwords, and stored credentials from web browsers (bypassing Chrome’s app-bound encryption) and other applications like FileZilla, Discord, Telegram, and MetaMask

- FileManager.dll, to give the operator filesystem access and manipulation capabilities

- Shell.dll, to execute system commands sent by the operator in a hidden cmd.exe process

- Informations.dll, to gather system information about the victim’s machine

- Webcam.dll, to record the victim and to verify if an infected machine is real

- TCPConnections.dll, ActiveWindows.dll, and StartupManager.dll, to send a list of active TCP connections, active windows, and startup programs, respectively, to the C2 server

- Ransomware.dll, to encrypt and decrypt files and extort users for a cryptocurrency ransom (has code overlaps with NoCry ransomware)

- Rootkit.dll, to install a modified r77 rootkit

- ResetSurvival.dll, to survive a device reset through Windows Registry modifications

XWorm 6.0 infections, besides dropping custom tools, have also served as a conduit for other malware families such as DarkCloud Stealer, Hworm (VBS-based RAT), Snake KeyLogger, Coin Miner, Pure Malware, ShadowSniff Stealer (open-source Rust stealer), Phantom Stealer, Phemedrone Stealer, and Remcos RAT.

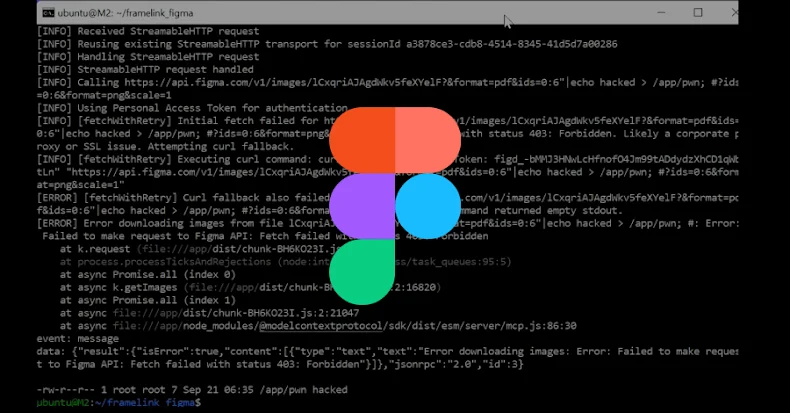

“Further investigation of the DLL file revealed multiple XWorm V6.0 Builders on VirusTotal that are themselves infected with XWorm malware, suggesting that an XWorm RAT operator has been compromised by XWorm malware!” Trellix said.

“The unexpected return of XWorm V6, armed with a versatile array of plugins for everything from keylogging and credential theft to ransomware, serves as a powerful reminder that no malware threat is ever truly gone.”